
Serverless Python Google Cloud Platform: Hosting Django on Cloud Run

Table of Contents

- Serverless

- What is serverless?

- Advantages of Serverless

- Pain Points of Serverless

- (Docker) Containers

- What is containerization?

- Docker

- Quick definitions:

- Advantages of using Docker Containers

- Cloud Run: Serverless + Containers

- Cloud Run Features that Developers Love

- Flexibility: Any language, any library, any binary

- Pricing

- Concurrency > 1

- Ability to easily use a CDN

- Portability: No vendor lock-in

- Developer Experience

- Dead simple to test locally

- Great Monitoring and logging

- Fully managed

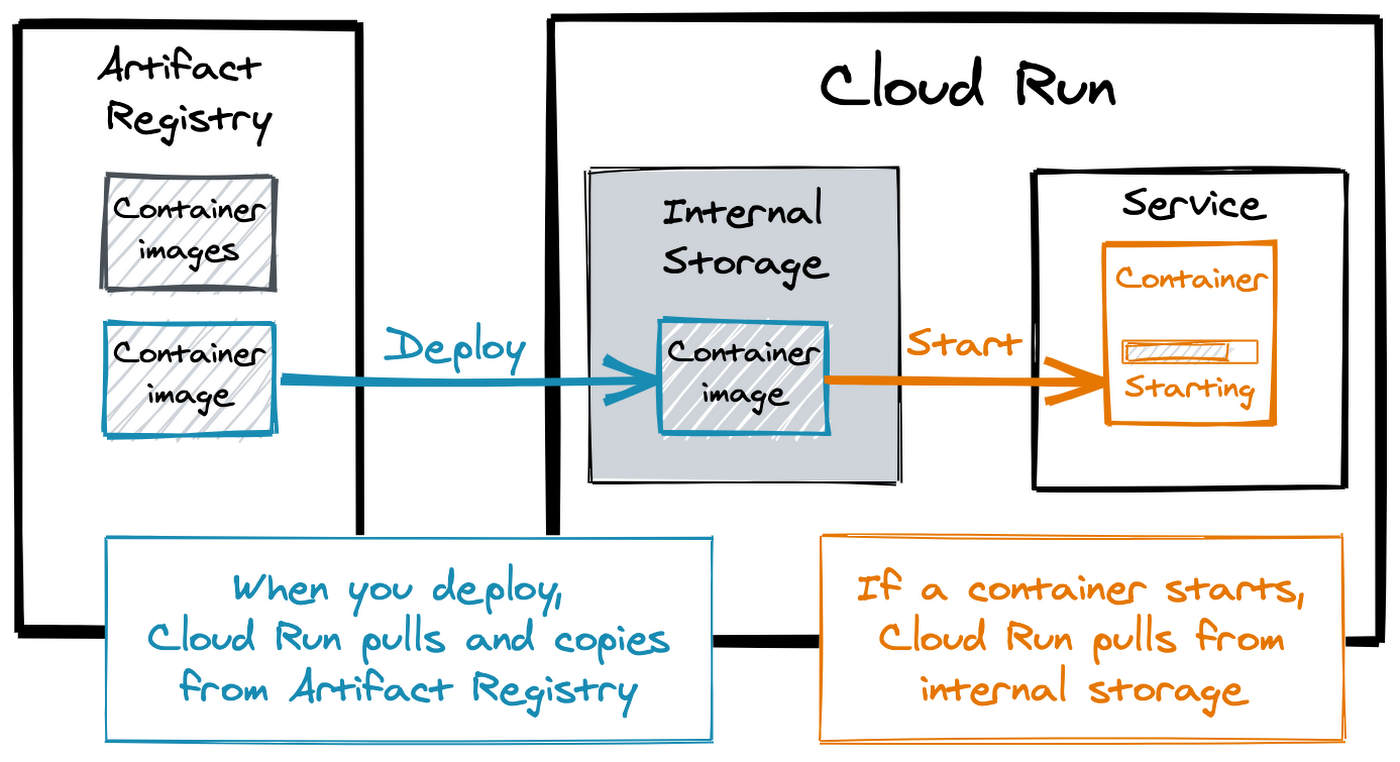

- Lifecycle of a container on Cloud Run

- Serving Requests

- Shutting Down

- Deployment

- Prerequisites:

- Step 1. Prepare Django

- Step 2. Add a Dockerfile

- Step 3. Build on Cloud Build + Deploy to Cloud Run

- Step 4. Visit Your Cloud Run Web App

- Conclusion

- Sources & More Reading

Already familiar with serverless and Docker? Jump to the Deployment section.

Serverless

What is serverless?

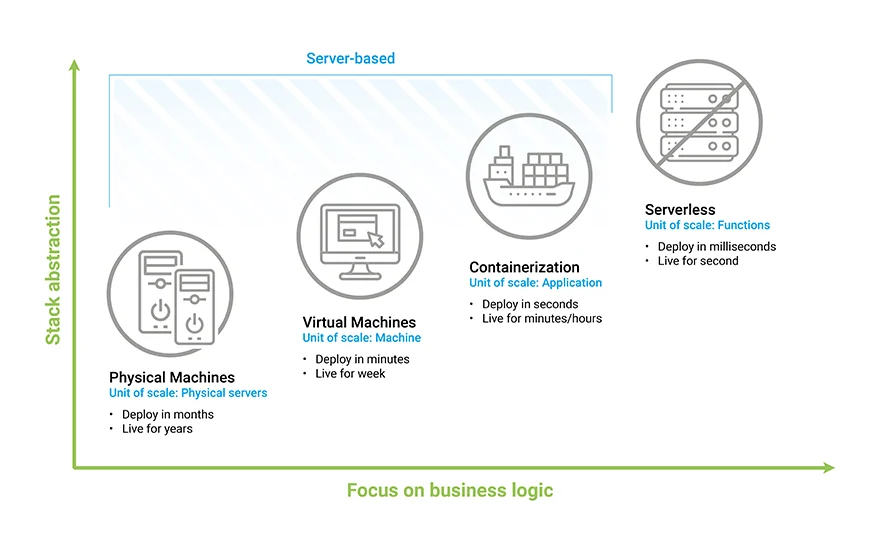

Serverless computing is a cloud computing model in which the user writes code that the service provider runs, without the user having to manage the underlying infrastructure. In other words, serverless lets you create applications without having to build, maintain, update, upgrade, or provision any infrastructure, all of which takes a lot of time and money.

It is a cloud computing execution model that:

- Automatically provisions the computing resources required to run application code on demand, or in response to a specific event.

- Automatically scales those resources up or down in response to increased or decreased demand.

- Automatically scales resources to zero when the application stops running/receiving requests.

Serverless computing allows developers to purchase backend services on a flexible ‘pay-as-you-go’ basis, meaning that developers only have to pay for the services they use. This is like switching from a cell phone data plan with a monthly fixed limit, to one that only charges for each byte of data that actually gets used.

The term ‘serverless’ is somewhat misleading, as there are still servers providing these backend services, but all of the server space and infrastructure concerns are handled by the vendor. Serverless means that the developers can do their work without having to worry about servers at all.

Examples of Serverless offerings:

Advantages of Serverless

- Lower costs - Serverless computing is generally very cost-effective, because with traditional server-based cloud backends you often end up paying for unused capacity or idle CPU time.

- Simplified automatic scaling - Automatically respond to code execution requests at any scale, from a dozen events per day to hundreds of thousands per second. Developers using serverless architecture don’t have to worry about policies to scale up their code. The serverless vendor handles all of the scaling on demand. No more configuring auto-scaling, load balancers, or paying for capacity you don’t use. Traffic is automatically routed and load-balanced across thousands of servers. Sleep well as your code scales effortlessly.

- Simplified backend code - With Functions-as-a-Service (FaaS), developers can write simple functions that each independently perform a single purpose, such as making an API call.

- Quicker turnaround - Serverless architecture can significantly cut time to market. Instead of needing a complicated deployment process to roll out bug fixes and new features, developers can add and modify code on a piecemeal basis.

- No servers to maintain - Spend more time building and less time configuring. There are no VMs, servers, or containers for you to spin up or manage; you deploy using the provider's CLI, web interface, or API.

As the saying goes, "No server is easier to manage than no server."

Pain Points of Serverless

- Testing and debugging become more challenging - It is difficult to replicate the serverless environment in order to see how code will actually perform once deployed. Debugging is more complicated because developers do not have visibility into backend processes, and because the application is broken up into separate, smaller functions.

- Serverless architectures are not built for long-running processes - This limits the kinds of applications that can cost-effectively run in a serverless architecture. Because serverless providers charge for the amount of time code is running, it may cost more to run an application with long-running processes in a serverless infrastructure compared to a traditional one.

- Performance may be affected (cold starts) - Because it is not constantly running, serverless code may need to 'boot up' when it is used, and this startup time may degrade performance. However, if a piece of code is used regularly, the serverless provider will keep it ready to be activated. A request for this ready-to-go code is called a 'warm start'; a request for code that hasn't been used in a while is called a 'cold start'.

- Vendor lock-in is a risk - Allowing a vendor to provide all backend services for an application inevitably increases reliance on that vendor. Setting up a serverless architecture with one vendor can make it difficult to switch vendors if necessary, especially since each vendor offers slightly different features and workflows.

- Unpredictable Costs - The cost model is different in serverless, and this means the architecture of your system has a more direct and visible influence on the running costs of your system. Additionally, with rapid autoscaling comes the risk of unpredictable billing. If you run a serverless system that scales elastically, then when big demand comes, it will be served, and you will pay for it. Just like performance, security, and scalability, costs are now a quality aspect of your code that you need to be aware of and that you as a developer can control. Don’t let this paragraph scare you: you can set boundaries to scaling behaviour and fine-tune the amount of resources that are available to your containers. You can also perform load testing to predict the cost.

- Hyper-Scalability - Most services can scale out to a lot of instances very quickly. This can cause trouble for downstream systems that cannot increase capacity as quickly or that have trouble serving a highly concurrent workload, such as traditional relational databases and external APIs with enforced rate limits.
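One way to act on the hyper-scalability point is to cap how far your service can scale so a downstream database is never asked for more connections than it allows. The sketch below is a back-of-the-envelope calculation, assuming every gunicorn thread may hold its own open Django database connection (so one instance can use up to workers × threads connections) and that you keep a few connections in reserve for admin tools; the function name and numbers are illustrative. The result is the kind of value you might pass to the `--max-instances` flag of `gcloud run deploy`.

```python
def max_safe_instances(db_max_connections: int,
                       workers_per_instance: int,
                       threads_per_worker: int,
                       reserved_connections: int = 0) -> int:
    """Largest instance count whose worst-case connection usage fits the database limit.

    Assumes each gunicorn thread may keep one database connection open.
    """
    per_instance = workers_per_instance * threads_per_worker
    if per_instance <= 0:
        raise ValueError("each instance must use at least one connection")
    available = db_max_connections - reserved_connections
    # Floor division: a partial instance would overshoot the limit.
    return max(available // per_instance, 0)


if __name__ == "__main__":
    # A database allowing 100 connections, 10 kept back for admin tools,
    # and the 1 worker x 8 threads gunicorn setup used in the Dockerfile of this guide.
    print(max_safe_instances(100, 1, 8, reserved_connections=10))  # prints 11
```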

(Docker) Containers

As a developer, you have probably heard of Docker at some point in your professional life. And you're likely aware that it has become an important piece of tech for any application developer to know.

Docker is a way to containerize applications (putting code in boxes that can work on their own). It magically makes a virtual computer, but guess what: they aren’t really virtual computers.

What is containerization?

Containers are boxes that bundle everything an application needs except the operating system kernel, which they share with the host, so they behave the same regardless of the device they run on.

Think of it like this: there’s a bee that only likes to live in its own honeycomb and will not be able to work if it lives somewhere else. You just trap the bee in a box that looks and feels exactly like its honeycomb. That’s containerization.

FYI, you don’t need to be a container expert to be productive with Cloud Run, but if you are, Cloud Run won’t get in your way.

Containerization is the packaging of software code with just the operating system (OS) libraries and dependencies required to run the code to create a single lightweight executable, called a container, that runs consistently on any infrastructure. More portable and resource-efficient than virtual machines (VMs), containers have become the de facto compute units of modern cloud-native applications. Containerization allows developers to create and deploy applications faster and more securely.

With traditional methods, code is developed in a specific computing environment, and moving it to a new location often results in bugs and errors, for example when a developer transfers code from a Linux to a Windows operating system. Containerization eliminates this problem by bundling the application code together with the related configuration files, libraries, and dependencies required for it to run. This single package of software, or “container”, is abstracted away from the host operating system; hence, it stands alone and becomes portable, able to run across platforms and clouds without environment-specific issues.

Docker

Docker is an open-source containerization platform. It enables developers to package applications into containers - standardized executable components combining application source code with the operating system (OS) libraries and dependencies required to run that code in any environment. Containers simplify the delivery of distributed applications and have become increasingly popular as organizations shift to cloud-native development and hybrid multi-cloud environments.

Developers can create containers without Docker, but the platform makes it easier, simpler, and safer to build, deploy and manage containers. Docker is essentially a toolkit that enables developers to build, deploy, run, update, and stop containers using simple commands and work-saving automation through a single API.

Quick definitions:

A Docker image is a software package that wraps your application executable, dependencies, configuration, and runtime into a secure, immutable unit.

You can build your image based on the instructions provided in a Dockerfile - a text file that contains instructions on how the Docker image will be built.

When the image is built you can store it locally or in some container image repository like hub.docker.com or Google Container Registry.

When you want to run the image, you start a container which needs your image. The container is the runtime instance of your image.

So at the end of the day, we can sum things up as:

- A Dockerfile is a recipe for creating Docker images.

- A Docker image gets built by running a Docker command (which uses a text file called a Dockerfile).

- A Docker container is a running instance of a Docker image.

Advantages of using Docker Containers

- Portability - Once you have tested your containerized application you can deploy it to any other system where Docker is running and you can be sure that your application will perform exactly as it did when you tested it.

- Performance - Although virtual machines are an alternative to containers, containers share the host's kernel instead of bundling a full operating system (as virtual machines do), so they have much smaller footprints than virtual machines, are faster to create, and are quicker to start.

- Scalability - You can quickly create new containers if demand for your applications requires them.

- Isolation - Docker ensures your applications and resources are isolated and segregated. Docker makes sure each container has its own resources that are isolated from other containers. You can have various containers for separate applications running completely different stacks. Docker helps you ensure clean app removal since each application runs on its own container. If you no longer need an application, you can simply delete its container. It won’t leave any temporary or configuration files on your host OS. On top of these benefits, Docker also ensures that each application only uses resources that have been assigned to them. A particular application won’t use all of your available resources, which would normally lead to performance degradation or complete downtime for other applications.

- Security - The last of these benefits is security. Docker isolates applications running in containers from each other, giving you control over traffic flow and management. A Docker container cannot look into the processes running inside another container. From an architectural point of view, each container gets its own set of resources, ranging from processing to network stacks.

Cloud Run: Serverless + Containers

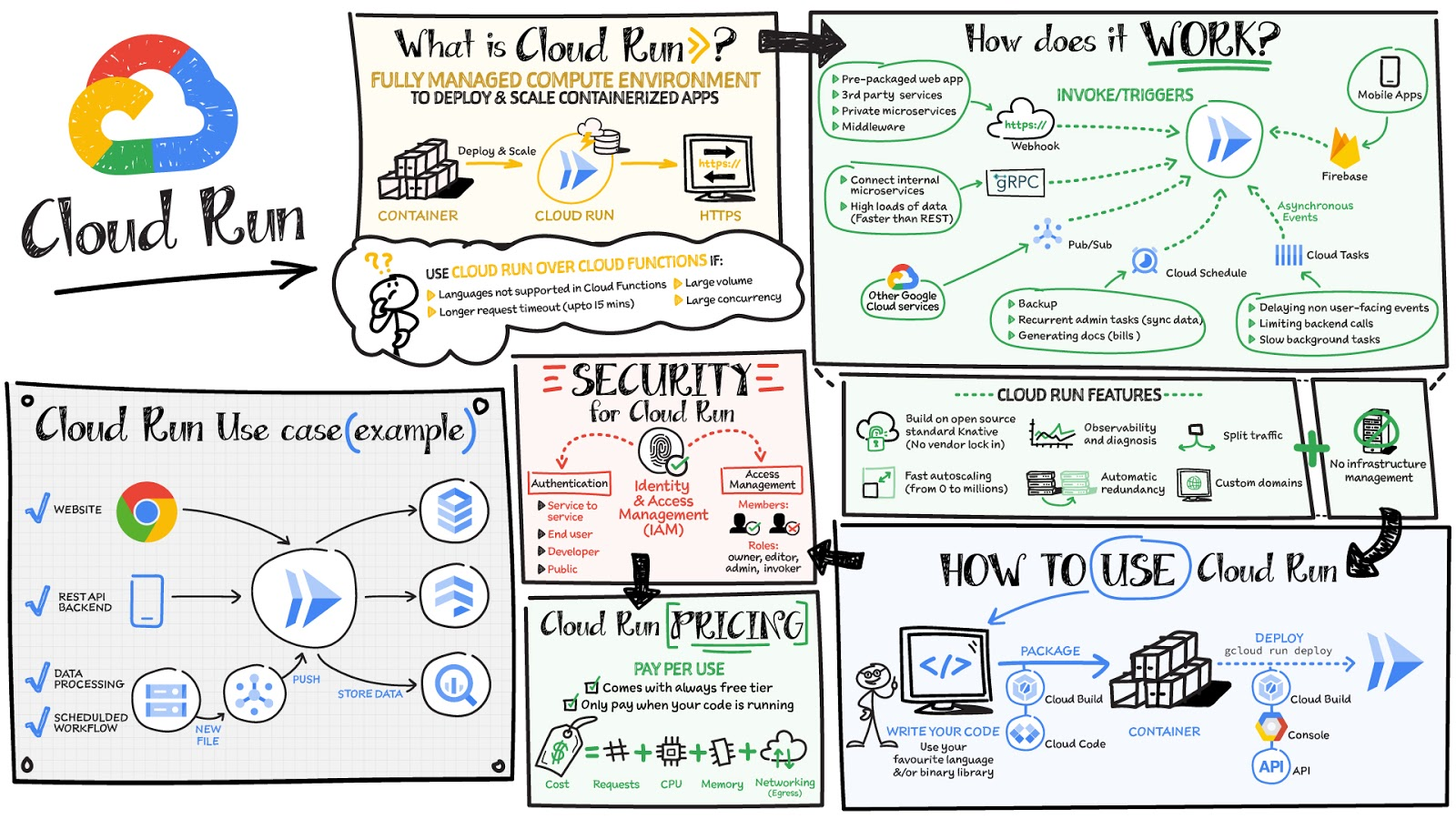

Cloud Run is a compute platform by Google Cloud Platform to run your stateless HTTP containers without worrying about provisioning machines, clusters or autoscaling. With Cloud Run, you go from a "container image" to a fully managed web application running on a domain name with TLS certificate that auto-scales with requests in literally two commands. You only pay while a request is handled. It allows you to hand over a container image with a web server inside, and specify some combination of memory/CPU resources and allowed concurrency.

Note that Cloud Run is serverless: it abstracts away all infrastructure management, so you can focus on what matters most — building great applications.

Cloud Run then takes care of creating an HTTP endpoint, receiving requests and routing them to containers, and making sure enough containers are running to handle the volume of requests. While your containers are handling requests, you are billed in 100 ms increments.

Typical Use case

Typical candidates are stateless workloads that finish each request within Cloud Run's request timeout (up to 60 minutes) and can be triggered through one or more HTTP endpoints, such as a CRUD REST API. Languages, libraries, and binaries don’t matter; only a container is required. Website backends, micro-batching, and ML inference are all possible use cases.

Cloud Run Features that Developers Love

Cloud Run combines the benefits of containers and serverless, plus a lot more.

Flexibility: Any language, any library, any binary

Other serverless offerings like Cloud Functions, AWS Lambda, or Azure Functions provide a limited choice of languages and runtimes. Cloud Run gives you the flexibility to build great applications in your favorite language, with your favorite dependencies and tools, and deploy them in seconds.

On Google Cloud Run you can even run Fortran or Pascal if you want. 🍄 Users have managed to run web servers written in x86 assembly, or the 22-year-old Python 1.3, on Cloud Run.

Pricing

Cloud Run comes with a generous free tier and is pay per use, which means you only pay while a request is being handled on your container instance. If it is idle with no traffic, then you don’t pay anything.

Once above the free tier, Cloud Run charges you only for the exact resources that you use.

For a given container instance, you are only charged when:

- The container instance is starting,

- At least one request or event is being processed by the container instance, or

- The container instance is shutting down

During that time, Cloud Run bills you only for the allocated CPU and memory, rounded up to the nearest 100 milliseconds. Cloud Run also charges for network egress and number of requests.
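To make the 100 ms rounding concrete, here is a small estimate of what one billable period costs. The per-second prices below are placeholder values chosen for illustration, not Cloud Run's actual rates (see the pricing page for those), and the sketch ignores the free tier, per-request charges, and network egress.

```python
import math

# Placeholder unit prices (assumptions for illustration, not official Cloud Run rates).
PRICE_PER_VCPU_SECOND = 0.000024
PRICE_PER_GIB_SECOND = 0.0000025


def billable_seconds(duration_ms: float) -> float:
    """Round a billable duration up to the next 100 ms and return it in seconds."""
    return math.ceil(duration_ms / 100) * 100 / 1000


def period_cost(duration_ms: float, vcpus: float, memory_gib: float) -> float:
    """Estimated CPU + memory charge for one billable period of an instance."""
    seconds = billable_seconds(duration_ms)
    return seconds * (vcpus * PRICE_PER_VCPU_SECOND + memory_gib * PRICE_PER_GIB_SECOND)


if __name__ == "__main__":
    # A 230 ms request on a 1 vCPU / 512 MiB instance is billed as 300 ms.
    print(billable_seconds(230))  # 0.3
    print(f"{period_cost(230, 1, 0.5):.9f}")
```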

As shared by Sebastien Morand, Team Lead Solution Architect at Veolia, and Cloud Run developer, this allows you to run any stateless container with a very granular pricing model:

"Cloud Run removes the barriers of managed platforms by giving us the freedom to run our custom workloads at lower cost on a fast, scalable and fully managed infrastructure."

Read more about Cloud Run pricing here.

Concurrency > 1

Cloud Run automatically scales the number of container instances you need to handle all incoming requests or events. However, contrary to other Functions-as-a-Service (FaaS) solutions like Cloud Functions, these instances can receive more than one request or event at the same time.

The maximum number of requests that can be processed simultaneously by a given container instance is called concurrency. By default, Cloud Run services have a maximum concurrency of 80.

Using a concurrency higher than 1 has a few benefits:

- You get better performance by reducing the number of cold starts (requests or events that are waiting for a new container instance to be started)

- Optimized resource consumption, and thus lower costs: If your code often waits for network operations to return (like calling a third-party API), the allocated CPU and memory can be used to process other requests in the meantime.
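To see why concurrency lowers costs, recall that an instance is billed while at least one request is in flight, not once per request. The sketch below merges overlapping request intervals on a single instance into busy periods. It assumes each busy period is rounded up to 100 ms on its own and ignores startup and shutdown time, so treat it as an illustration rather than an exact billing model.

```python
import math
from typing import List, Tuple

# (start_ms, end_ms) of one request handled by an instance.
Interval = Tuple[int, int]


def busy_periods(requests: List[Interval]) -> List[Interval]:
    """Merge overlapping requests: the instance is busy while any request is in flight."""
    merged: List[Interval] = []
    for start, end in sorted(requests):
        if merged and start <= merged[-1][1]:
            # Overlaps (or touches) the current busy period: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def billable_ms(requests: List[Interval]) -> int:
    """Billable time for one instance, rounding each busy period up to 100 ms."""
    return sum(math.ceil((end - start) / 100) * 100 for start, end in busy_periods(requests))


if __name__ == "__main__":
    # Three 500 ms requests arriving 100 ms apart.
    reqs = [(0, 500), (100, 600), (200, 700)]
    shared = billable_ms(reqs)                      # one instance, concurrency >= 3
    separate = sum(billable_ms([r]) for r in reqs)  # concurrency 1: one instance per request
    print(shared, separate)  # 700 1500
```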

Read more about the concept of concurrency here.

Ability to easily use a CDN

Firebase Hosting pairs great with Cloud Run: put a custom domain on any Cloud Run service in any region, cache requests and serve static files in a global CDN for free.

Portability: No vendor lock-in

When you develop for Cloud Run, you have to build a container. This container can be deployed anywhere: on premises, on Kubernetes/GKE, on Cloud Run (both the serverless and the GKE flavors), on a VM, and so on. It also makes it very simple for people who already run containerized, stateless web servers to get serverless benefits.

It is also good to know that Cloud Run is based on Knative, an open-source project. If you ever want to leave the managed Google Cloud environment, you can deploy the same app and get similar features with Knative anywhere Kubernetes runs.

Developer Experience

Cloud Run provides a simple command-line tool and user interface to quickly deploy and manage your services.

Dead simple to test locally

It’s also dead simple to test locally, because inside your container is a fully-featured web server doing all the work.

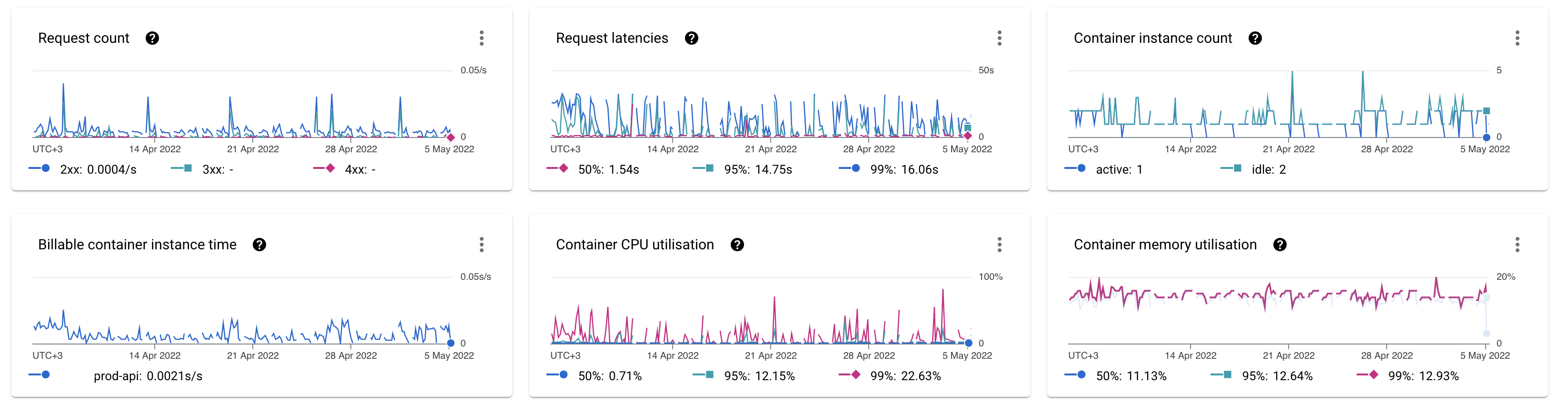

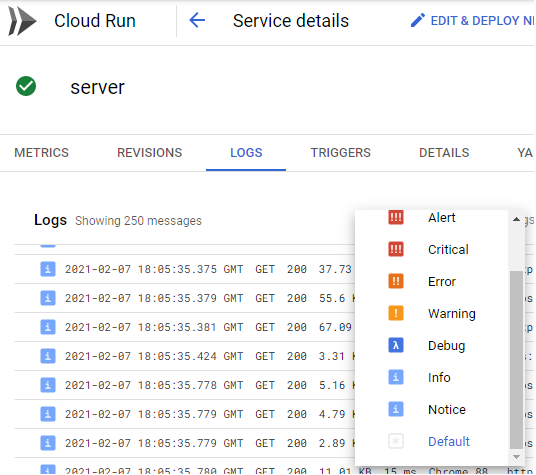

Great Monitoring and logging

Cloud Run is fully integrated with Google Cloud's Stackdriver suite (now Google Cloud's operations suite, including Cloud Logging and Cloud Monitoring). If you write your logs in the structured format Stackdriver expects, you can easily filter them by severity.
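A minimal sketch of that structured format, assuming the Cloud Logging convention that a single-line JSON object written to stdout becomes a structured entry, with the `severity` and `message` keys mapped to the entry's severity and text and any other keys kept as payload fields. The helper names `format_entry` and `log`, and the `order_id` field, are made up for illustration.

```python
import json
import sys


def format_entry(message: str, severity: str = "INFO", **fields) -> str:
    """Build one structured log entry as a single-line JSON string."""
    return json.dumps({"severity": severity, "message": message, **fields})


def log(message: str, severity: str = "INFO", **fields) -> None:
    """Write the entry to stdout, which Cloud Run forwards to its logs."""
    print(format_entry(message, severity, **fields), file=sys.stdout, flush=True)


if __name__ == "__main__":
    log("Order processed", order_id="A-1001")  # order_id is a made-up example field
    log("Payment provider timed out", severity="ERROR")
```

In the Logs Explorer you could then filter on the severity (for example, only `ERROR` entries) instead of searching raw text.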

Fully managed

There is no infrastructure to manage. Once you have deployed your application, Cloud Run manages everything else.

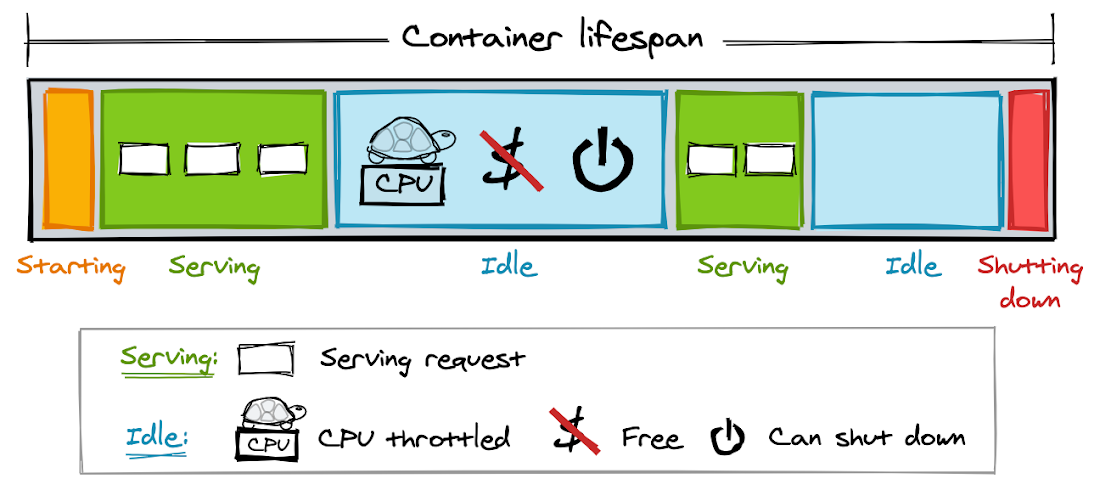

Lifecycle of a container on Cloud Run

A brief overview of how Cloud Run works.

Serving Requests

When a container is not handling any requests, it is considered idle. On a traditional server, you might not think twice about this. But on Cloud Run, this is an important state:

- An idle container is free. You’re only billed for the resources your container uses when it is starting, handling requests (with a 100ms granularity), or shutting down.

- An idle container’s CPU is throttled to nearly zero. This means your application will run at a really slow pace. That makes sense, considering this is CPU time you’re not paying for.

When a container handles a request after being idle, Cloud Run will unthrottle the container’s CPU instantly. Your application — and your user — won’t notice any lag.

Cloud Run can keep idle containers around longer than you might expect, too, in order to handle traffic spikes and reduce cold starts. Don’t count on it, though. Idle containers can be shut down at any time.

Shutting Down

If your container is idle, Cloud Run can decide to stop it. By default, a container just disappears when it is shut down.
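Current Cloud Run documentation describes sending a SIGTERM signal to the container before an instance is stopped, followed by a SIGKILL roughly 10 seconds later. In this guide's setup gunicorn already reacts to SIGTERM by shutting its workers down gracefully, so the minimal sketch below is mainly relevant if you run your own entrypoint. The `shutdown_requested` event and the flush-as-cleanup step are illustrative choices, not something Cloud Run requires.

```python
import signal
import sys
import threading

# Set once the platform asks this process to stop.
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Record the shutdown request and do quick cleanup (only seconds remain)."""
    shutdown_requested.set()
    # Illustrative cleanup: make sure buffered log lines reach the logs.
    sys.stdout.flush()
    sys.stderr.flush()


def install_sigterm_handler() -> None:
    # Python only allows installing signal handlers from the main thread.
    signal.signal(signal.SIGTERM, handle_sigterm)


if __name__ == "__main__":
    install_sigterm_handler()
    # Stand-in for a serving loop: wake up periodically and exit once SIGTERM arrives.
    while not shutdown_requested.wait(timeout=1.0):
        pass
    print("SIGTERM received, exiting cleanly")
```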

Deployment

With Cloud Run, you go from a "container image" to a fully managed web application running on a domain name with TLS certificate that auto-scales with requests in two commands.

A container image is a self-contained package with your application and everything it needs to run. If you run a container image, that’s called a container. Cloud Run expects your container to listen for incoming requests on port 8080, running an HTTP server. The port number 8080 is a default you can override when you deploy the container.
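In this guide gunicorn is the HTTP server, but the contract itself is small: listen on all interfaces on the port given by the PORT environment variable. The standard-library sketch below, with a trivial WSGI app standing in for Django, shows just that; the `listen_port` and `app` names are made up for illustration.

```python
import os
from wsgiref.simple_server import make_server


def listen_port(default: int = 8080) -> int:
    """Port Cloud Run asks the container to listen on, falling back to 8080 locally."""
    return int(os.environ.get("PORT", default))


def app(environ, start_response):
    """Trivial WSGI application standing in for Django's wsgi.application."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Hello from Cloud Run\n"]


if __name__ == "__main__":
    # Bind to 0.0.0.0, not 127.0.0.1, so traffic forwarded into the container reaches the server.
    with make_server("0.0.0.0", listen_port(), app) as server:
        server.serve_forever()
```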

When you deploy a container image to Cloud Run for the first time, it creates a service for you. A service automatically gets a unique HTTPS endpoint (more about that later).

If requests come in, Cloud Run starts your container to handle them. It adds more instances of your container if needed to handle all incoming requests, automatically scaling up and down.

Prerequisites:

- Create a GCP project and link billing.

- Install the gcloud CLI.

- Enable the required GCP project services.

You can enable them via the CLI:

```shell
gcloud services enable containerregistry.googleapis.com cloudbuild.googleapis.com run.googleapis.com
```

Step 1. Prepare Django

Part 1: Add a requirements.txt file (most Python projects already have one) listing all of your dependencies plus gunicorn, the web server we will be using.

Example:

```
Django==4.0.4
gunicorn==20.1.0
```

Part 2: Edit the ALLOWED_HOSTS in your Django settings to allow all hosts (not suitable for a production environment and should be changed later to your preferred host(s)):

```python
# settings.py
ALLOWED_HOSTS = ['*']
```
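Since `['*']` accepts any Host header, one way to tighten it later without editing code is to read the list from an environment variable. This is a sketch, assuming a variable named `DJANGO_ALLOWED_HOSTS` (a name chosen for this example) holding comma-separated hostnames, such as the host part of your Service URL; you could set it at deploy time with the `--set-env-vars` flag of `gcloud run deploy`.

```python
# settings.py (excerpt)
import os
from typing import List


def parse_hosts(raw: str) -> List[str]:
    """Split a comma-separated host list, ignoring blanks and surrounding spaces."""
    return [host.strip() for host in raw.split(",") if host.strip()]


# DJANGO_ALLOWED_HOSTS is a hypothetical variable name; "*" keeps this guide's permissive default.
ALLOWED_HOSTS = parse_hosts(os.environ.get("DJANGO_ALLOWED_HOSTS", "*"))
```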

Step 2. Add a Dockerfile

In the root project directory, create a file named Dockerfile (with no file extension) and add the code below.

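```dockerfile
# Pull the official base image
FROM python:3.10

# Send Python output straight to the container logs instead of buffering it
ENV PYTHONUNBUFFERED=1

# Set the work directory
WORKDIR /app

# Install dependencies first so this layer stays cached until requirements.txt changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy local code to the container image
COPY . .

# Run the web service on container startup. Here we use the gunicorn web server,
# with one worker process and 8 threads. Replace mysite with your Django project's name.
# --timeout 0 disables gunicorn's worker timeout so Cloud Run's request timeout applies.
CMD exec gunicorn --bind 0.0.0.0:$PORT --workers 1 --threads 8 --timeout 0 mysite.wsgi:application
```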
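If the CMD line grows, gunicorn can read the same settings from a Python config file; by default it looks for `gunicorn.conf.py` in the working directory, which the `COPY . .` step places in /app. This is a sketch mirroring the command-line flags, assuming the file sits in the project root next to the Dockerfile. The `timeout = 0` setting disables gunicorn's worker timeout so that Cloud Run's request timeout is the one that applies. With the file in place the command could shrink to `gunicorn mysite.wsgi:application`; flags given on the command line still override values from the file.

```python
# gunicorn.conf.py (project root, next to the Dockerfile)
import os

# Listen on every interface, on the port Cloud Run provides (8080 if unset).
bind = f"0.0.0.0:{os.environ.get('PORT', '8080')}"

# One worker process with 8 threads, as in the Dockerfile's CMD.
# With threads > 1, gunicorn switches the default sync worker to the gthread worker.
workers = 1
threads = 8

# 0 disables gunicorn's worker timeout, leaving request timeouts to Cloud Run.
timeout = 0
```

Note that Cloud Run sends up to 80 concurrent requests to an instance by default, while this configuration processes 8 at a time; you may want to align the two, for example with the `--concurrency` flag of `gcloud run deploy`.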

Step 3. Build on Cloud Build + Deploy to Cloud Run

Remember to replace $GC_PROJECT with your GCP project ID.

Part 1: Build Image (using Cloud Build):

Build the image and save it to Google Container Registry. eu.gcr.io/$GC_PROJECT/my-dj-app is the image to build. The image must be in the format registry/project/imageName.

```shell
gcloud builds submit --tag eu.gcr.io/$GC_PROJECT/my-dj-app
```

Part 2: Deploy to Cloud Run

Deploy the image to Cloud Run. Remember to copy the Service URL from the output; you will need it in Step 4.

```shell
gcloud run deploy my-service \
  --image eu.gcr.io/$GC_PROJECT/my-dj-app \
  --project $GC_PROJECT \
  --region "europe-west1" \
  --allow-unauthenticated
```

Flags:

- `--image`: The image to deploy. It's the same image we built in Part 1.

- `--project`: Your Google Cloud project.

- `--region`: The physical region where the service will be deployed.

- `--allow-unauthenticated`: Ensures you can access the service's URL without passing an authentication header.

You can also Build + Run Container Locally (Optional):

Do this just to test if it works. To build the Docker image from the Dockerfile we created above, execute the commands below:

```shell
# Build image
docker build -t my-dj-app .

# Run container
docker run -e PORT=8080 -p 8080:8080 -d --name my-container my-dj-app

# Make request
curl localhost:8080

# Check logs
docker logs my-container

# Stop container
docker stop my-container
```
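If you prefer a scripted check over curl, a few lines of standard-library Python can hit the running container and report the HTTP status. This is a sketch; the default URL assumes the `-p 8080:8080` port mapping used above, and `smoke_test` is a name chosen for this example.

```python
import sys
import urllib.error
import urllib.request


def smoke_test(url: str = "http://localhost:8080/", timeout: float = 5.0) -> int:
    """Return the HTTP status code served at `url` (raises URLError if nothing is listening)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # A 4xx/5xx response still proves the server is up; report its status code.
        return err.code


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "http://localhost:8080/"
    status = smoke_test(target)
    print(f"{target} -> HTTP {status}")
    sys.exit(0 if status < 400 else 1)
```

Django answers with 400 Bad Request when the Host header is not in ALLOWED_HOSTS, so a 400 here usually points at your settings rather than at the container.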

You can also Build Locally + Deploy to Cloud Run (Optional):

Do this just to test if it works. Replace $GC_PROJECT with your GCP project.

```shell
# Let Docker authenticate to Google Container Registry (one-time setup)
gcloud auth configure-docker

# Build Docker Image
docker build -t eu.gcr.io/$GC_PROJECT/my-dj-app .

# Push Docker Image to Google Container Registry
docker push eu.gcr.io/$GC_PROJECT/my-dj-app

# Deploy the image from GCR to Cloud Run
gcloud run deploy my-service \
  --image eu.gcr.io/$GC_PROJECT/my-dj-app \
  --project $GC_PROJECT \
  --region "europe-west1" \
  --platform managed \
  --allow-unauthenticated
```

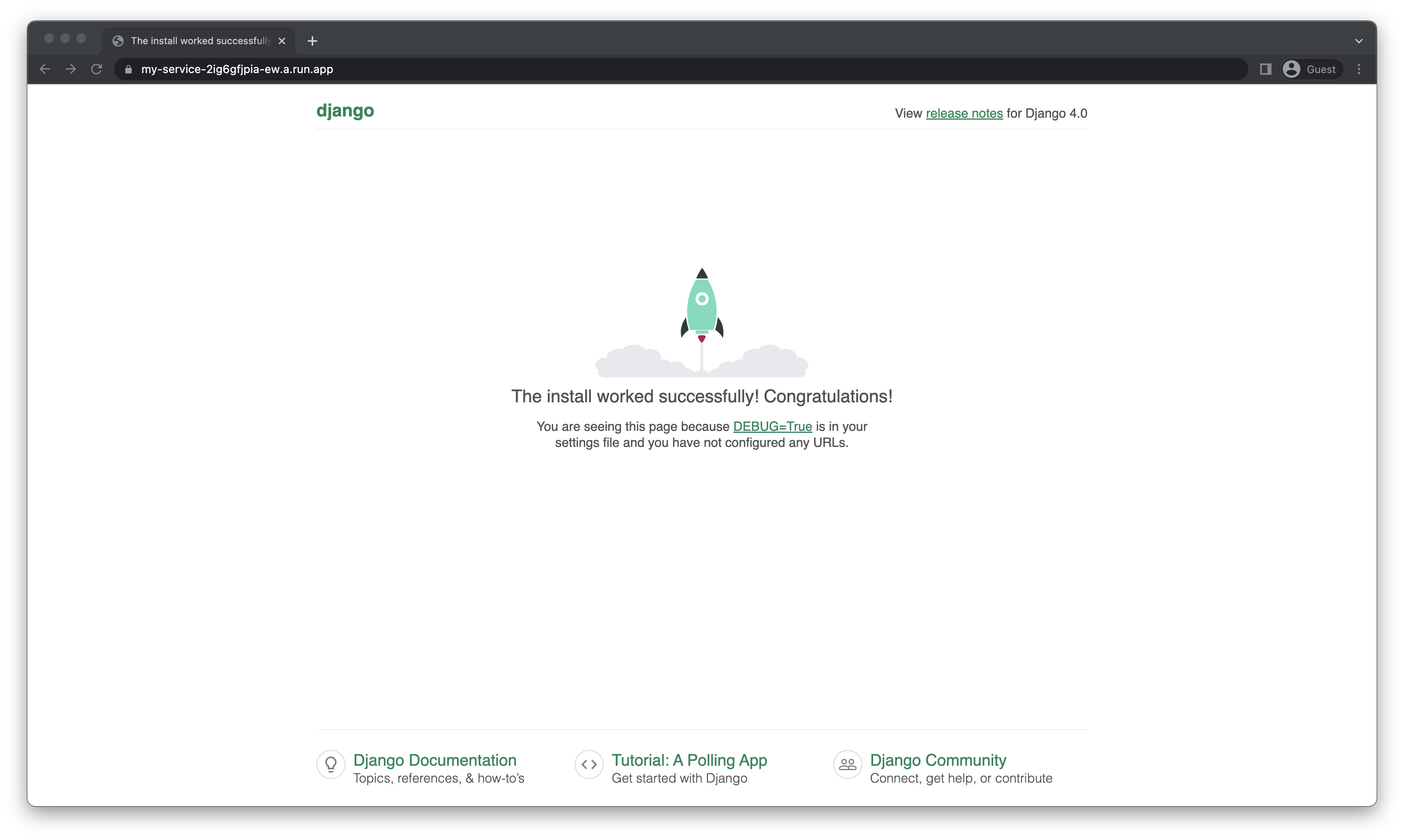

Step 4. Visit Your Cloud Run Web App

Visit the Service URL you got in the above step to view your service. Here’s mine:

Done!

Conclusion

Thank you for reaching the end of the guide!

I hope you learned something.

Sources & More Reading

- https://www.cloudflare.com/en-gb/learning/serverless/what-is-serverless

- https://cloud.google.com/blog/topics/developers-practitioners/lifecycle-container-cloud-run

- https://cloud.google.com/blog/topics/developers-practitioners/cloud-run-story-serverless-containers

- https://python.plainenglish.io/docker-explained-to-a-5-year-old-ffc4e6197fe0

- https://www.ibm.com/cloud/learn/containerization

- https://www.ibm.com/cloud/learn/docker

- https://www.digitalocean.com/community/conceptual_articles/introduction-to-containers

- https://docs.docker.com/samples/django/

- https://cloud.google.com/blog/products/serverless/6-strategies-for-scaling-your-serverless-applications

- https://cloud.google.com/blog/products/serverless/3-cool-cloud-run-features-that-developers-love-and-that-you-will-too


- https://www.cloudskillsboost.google/course_templates/371 - Application Development with Cloud Run

- https://github.com/ahmetb/cloud-run-faq - Unofficial FAQ and everything you've been wondering about Google Cloud Run.

- https://github.com/steren/awesome-cloudrun